Points captured from the talk by Chip Huyen.

AI Engg -> process of building apps on top of foundation models

Foundation models -> term coined at Stanford

AI Engg

1) Model as a service -> Anyone can use AI

2) Open-ended evaluations -> responses are open-ended, so harder to evaluate

How to evaluate -> Comparative evals (Chatbot Arena), AI-as-a-judge, 5 prompts to evaluate
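A minimal sketch of how comparative evals can turn pairwise votes into a ranking, assuming an Elo-style update. The talk notes do not say which rating scheme was discussed; the model names, votes, starting rating, and `k` factor below are all made up for illustration.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update_elo(ratings: dict, winner: str, loser: str, k: float = 32.0) -> None:
    """Shift rating points from loser to winner after one human vote."""
    e_w = expected_score(ratings[winner], ratings[loser])
    delta = k * (1.0 - e_w)  # bigger shift when the win was unexpected
    ratings[winner] += delta
    ratings[loser] -= delta


# Hypothetical models and votes; each tuple is (preferred, rejected).
ratings = {"model_a": 1000.0, "model_b": 1000.0, "model_c": 1000.0}
votes = [("model_a", "model_b"), ("model_a", "model_c"), ("model_b", "model_c")]

for winner, loser in votes:
    update_elo(ratings, winner, loser)

ranking = sorted(ratings, key=ratings.get, reverse=True)
print(ranking)
```

Each vote only compares two responses, so no absolute score for an open-ended answer is ever needed.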

Evaluation is a big challenge.

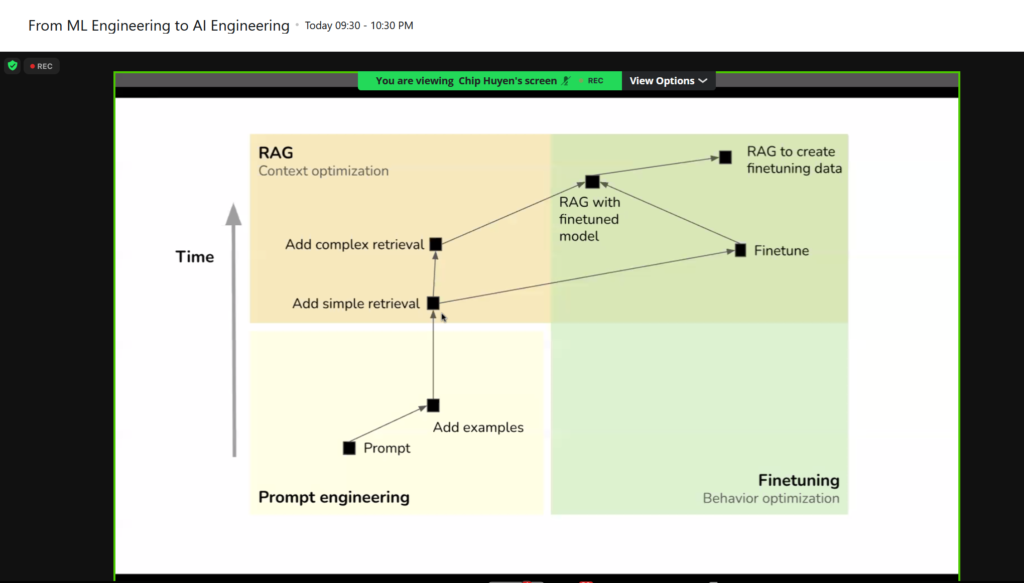

Feature engg -> context construction

Problem -> Hallucination. Models hallucinate less when given lots of context

Retrieval (RAG) -> BM25, Elasticsearch, Dense Passage Retrieval, Vector DB (compute intensive)
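A minimal sketch of the term-based end of that list: BM25 scoring in plain Python. It assumes whitespace tokenization and the smoothed IDF variant used by Lucene-style engines; `k1`, `b`, and the sample documents are illustrative, not from the talk.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list, k1: float = 1.5, b: float = 0.75) -> list:
    """Score every document against the query with Okapi BM25."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in tokenized for term in set(d))

    scores = []
    for doc in tokenized:
        tf = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: long documents get less credit per hit.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "bm25 is a keyword based ranking function",
    "dense retrieval uses learned embeddings",
    "vector databases store embeddings for similarity search",
]
scores = bm25_scores("keyword ranking", docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(docs[best])
```

Nothing here needs a model or a GPU, which is the contrast with the embedding-based options on the same line.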

Future -> trying to build embeddings for tables

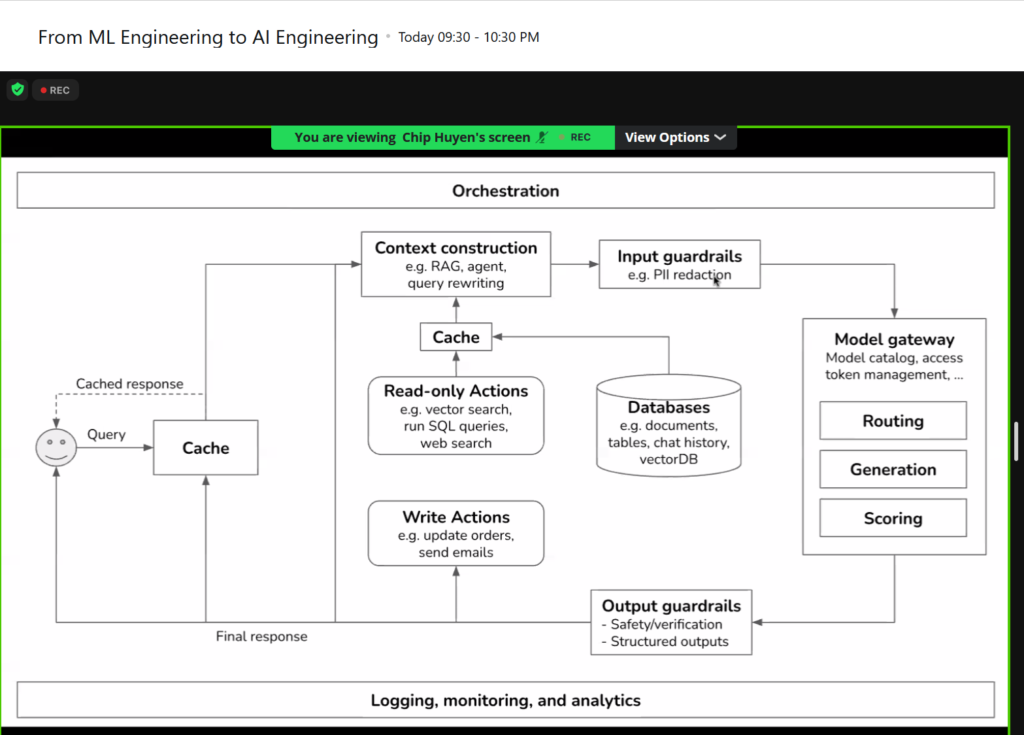

Agentic

Bigger size -> higher latency, expensive, requiring more expertise to host
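A rough back-of-the-envelope sketch of why size drives hosting cost: memory for the weights alone is parameter count times bytes per parameter. The model sizes below are hypothetical round numbers, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(n_params: int, dtype: str) -> float:
    """Memory for the weights only, in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical model sizes, chosen only to show the scaling.
for n_params in (7_000_000_000, 70_000_000_000):
    for dtype in ("fp32", "fp16", "int8"):
        print(n_params, dtype, weight_memory_gb(n_params, dtype))
```

Once the weights no longer fit on one accelerator, the model has to be split across devices, which is where the extra hosting expertise comes in.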

Check this -> ????

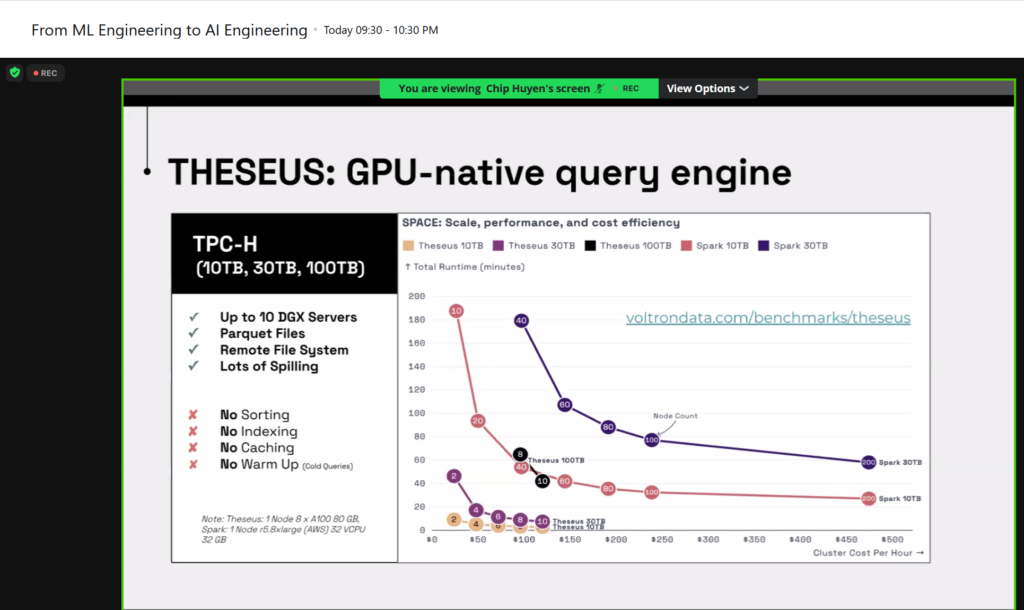

Inference optimization -> hardware, algorithm, model architecture

cache
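The note does not say which kind of cache was meant. One simple possibility is an exact-match response cache in front of the model, sketched below. `ResponseCache` and `fake_model` are hypothetical names, and the whitespace and case normalization is an assumption that would not suit every app.

```python
import hashlib


class ResponseCache:
    """Exact-match cache: reuse a stored response for a repeated prompt."""

    def __init__(self, model_fn):
        self._model_fn = model_fn  # any callable: prompt -> response
        self._store = {}
        self.hits = 0
        self.misses = 0

    def _key(self, prompt: str) -> str:
        # Collapse whitespace and lowercase so trivial variants share a key.
        normalized = " ".join(prompt.split()).lower()
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def generate(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._model_fn(prompt)  # the expensive call
        self._store[key] = response
        return response


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"echo: {prompt}"


cache = ResponseCache(fake_model)
first = cache.generate("What is RAG?")
second = cache.generate("what is   rag?")  # served from the cache
print(cache.hits, cache.misses)
```

Other caches used for inference (for example the attention KV cache inside the model) work at a different layer and are not shown here.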

Parameter-efficient finetuning (PEFT)
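A small arithmetic sketch of why this saves so much, assuming LoRA as the method (the notes do not name one). LoRA freezes a `d_in x d_out` weight matrix and trains two low-rank factors of shapes `d_in x r` and `r x d_out` instead. The dimensions and rank below are illustrative.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when finetuning the whole weight matrix."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for the two low-rank LoRA factors."""
    return rank * (d_in + d_out)


# Illustrative sizes: one square projection matrix with a rank-8 adapter.
d, r = 4096, 8
full = full_params(d, d)
lora = lora_params(d, d, r)
ratio = lora / full
print(full, lora, ratio)
```

For these sizes the adapter trains under 0.4% of the parameters in that matrix, which cuts the memory needed for gradients and optimizer state accordingly.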

Topics to check

Apache Arrow

Debugging GenAI apps

Distributed systems for LLMs

Some snapshots of the presentation made by Chip.